KEDA (Kubernetes Event-driven Autoscaling) is an open-source project that brings event-driven scaling to Kubernetes. It lets Kubernetes workloads, such as deployments or jobs, scale automatically based on metrics or events coming from external systems.

KEDA works as an add-on to Kubernetes and integrates seamlessly with Horizontal Pod Autoscaler (HPA), providing a more dynamic and flexible scaling mechanism tailored to event-driven architectures.

Key Features of KEDA

- Event-Driven Scaling:
  - Scales workloads based on external events, such as messages in a queue, database query rates, or custom metrics.
  - Moves beyond traditional resource-based autoscaling (CPU/Memory).

- Multiple Scalers:
  - Supports a wide range of event sources, including Kafka, RabbitMQ, Azure Service Bus, AWS SQS, Prometheus, PostgreSQL, and more.
  - Can handle custom event sources via custom scalers.

- Seamless Integration:
  - Works with Kubernetes-native concepts like deployments, stateful sets, and the custom metrics API.
  - Automatically integrates with Kubernetes’ Horizontal Pod Autoscaler.

- On-Demand Scaling:
  - Keeps pods at zero (or a minimal count) when there are no events, enabling cost-efficient idle states.
  - Scales up pods only when triggered by an event.

- Simple Configuration:
  - Configure scaling behavior through Kubernetes custom resources (e.g., ScaledObject and ScaledJob).

- Platform-Agnostic:
  - Supports on-premise Kubernetes clusters, managed services like AKS, EKS, and GKE, and hybrid environments.
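
To make the on-demand scaling behavior above more concrete, here is a minimal sketch of the ScaledObject fields that control scale-to-zero. The resource name, the `orders-consumer` Deployment, and the Kafka broker, topic, and consumer group are hypothetical placeholders, not values from a real cluster.

```yaml
# Sketch only: all names and addresses below are illustrative assumptions.
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: idle-friendly-scaler          # hypothetical name
spec:
  scaleTargetRef:
    name: orders-consumer             # hypothetical Deployment to scale
  pollingInterval: 30                 # seconds between checks of the event source
  cooldownPeriod: 300                 # seconds to wait after the last activity before scaling to zero
  minReplicaCount: 0                  # allow the workload to sit at zero pods when idle
  maxReplicaCount: 10                 # upper bound on replicas
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.example.svc:9092   # hypothetical broker address
        consumerGroup: orders-group                # hypothetical consumer group
        topic: orders                              # hypothetical topic
        lagThreshold: "50"                         # target consumer lag per replica
```

A shorter `pollingInterval` reacts to new events sooner at the cost of more frequent queries against the event source, while a longer `cooldownPeriod` avoids repeatedly tearing down and restarting pods when traffic is bursty.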

How KEDA Works

- External Metrics API:
  - KEDA’s metrics server implements the Kubernetes External Metrics API, so metrics from external systems can act as triggers for HPA-driven autoscaling.

- Core Components:
  - Operator: Manages the lifecycle of custom resources (ScaledObject and ScaledJob), interacts with the Kubernetes HPA, and handles scaling between zero and one replica.
  - Metrics Server: Collects and exposes metrics from external event sources to Kubernetes.

- Scaling Objects:
  - ScaledObject: Defines how a Kubernetes workload should scale based on an external event source.
  - ScaledJob: Configures how jobs should scale dynamically based on event triggers.
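
As a rough illustration of the ScaledJob resource described above, the sketch below creates Kubernetes Jobs in response to queued messages instead of scaling a long-running Deployment. The resource name, container name, image, queue name, and connection string are placeholders chosen for illustration.

```yaml
# Sketch only: names, image, and connection details are illustrative assumptions.
apiVersion: keda.sh/v1alpha1
kind: ScaledJob
metadata:
  name: queue-job-runner              # hypothetical name
spec:
  jobTargetRef:                       # template for the Jobs that KEDA creates
    template:
      spec:
        containers:
          - name: job-worker          # hypothetical container name
            image: worker-image       # placeholder image
        restartPolicy: Never
  pollingInterval: 30                 # seconds between checks of the queue
  maxReplicaCount: 10                 # cap on Jobs running at the same time
  successfulJobsHistoryLimit: 5       # completed Jobs to keep for inspection
  failedJobsHistoryLimit: 5           # failed Jobs to keep for inspection
  triggers:
    - type: rabbitmq
      metadata:
        queueName: my-queue
        host: amqp://guest:guest@rabbitmq-host
        mode: QueueLength
        value: "1"                    # aim for roughly one Job per queued message
```

A ScaledJob tends to suit work where each event is a discrete, possibly long-running task that should run to completion, whereas a ScaledObject suits consumers that keep pulling messages for as long as they are running.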

Use Cases

- Message Queue Processing:
  - Automatically scale consumers of message queues like RabbitMQ, Kafka, or Azure Service Bus based on the number of messages in the queue.

- Serverless Event Handling:
  - Build event-driven applications that remain idle (zero pods) when there are no events.

- Database Query Load:
  - Scale workloads based on metrics like row count in a database table.

- IoT and Streaming:
  - Scale workloads to process real-time data streams from IoT devices or Kafka topics.

- Custom Metrics Scaling:
  - Scale based on custom business metrics exposed via Prometheus or other monitoring tools.
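
The custom metrics use case above could look something like the following sketch, which scales on a Prometheus query. The `orders-api` Deployment, the Prometheus address, and the `orders_processed_total` metric are hypothetical and would need to match your own environment.

```yaml
# Sketch only: the target, server address, and metric name are illustrative assumptions.
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: orders-rate-scaler            # hypothetical name
spec:
  scaleTargetRef:
    name: orders-api                  # hypothetical Deployment to scale
  minReplicaCount: 1                  # keep one replica running to serve requests
  maxReplicaCount: 20
  triggers:
    - type: prometheus
      metadata:
        serverAddress: http://prometheus.monitoring.svc:9090   # hypothetical Prometheus endpoint
        query: sum(rate(orders_processed_total[2m]))           # hypothetical business metric
        threshold: "100"              # target value of the query result per replica
```

Here `minReplicaCount` is set to 1 rather than 0, on the assumption that a request-serving API should not scale to zero; a pure background consumer could use 0 instead.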

Architecture

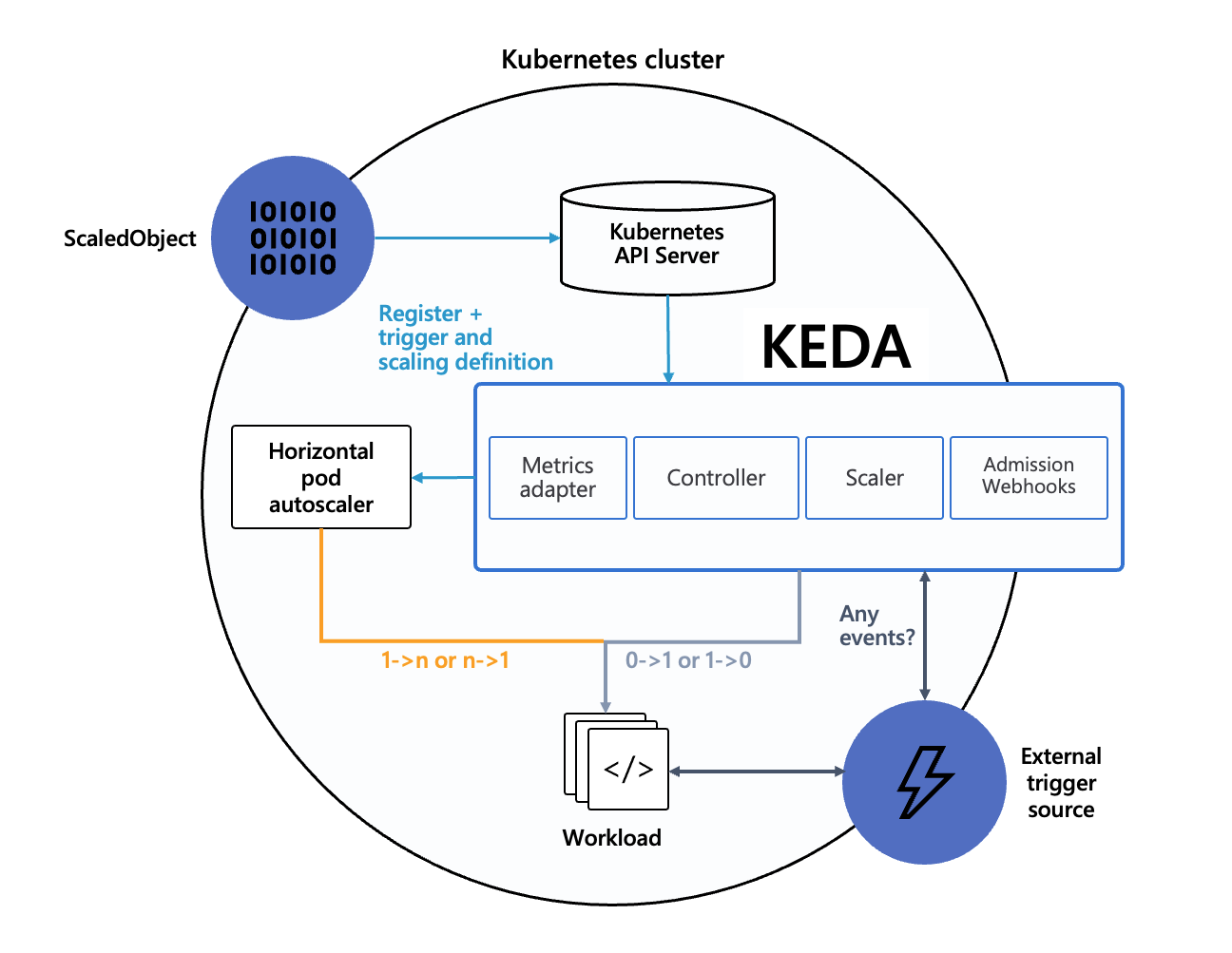

KEDA Architecture Diagram: an overview of how KEDA integrates with Kubernetes components, external event sources, and the Horizontal Pod Autoscaler (HPA).

Example Configuration

Here’s an example of how to scale a deployment based on the number of messages in a RabbitMQ queue:

Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: worker
    spec:
      replicas: 0               # start at zero; KEDA manages the replica count
      selector:
        matchLabels:
          app: worker
      template:
        metadata:
          labels:
            app: worker
        spec:
          containers:
            - name: worker
              image: worker-image

KEDA ScaledObject:

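    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: rabbitmq-scaler
    spec:
      scaleTargetRef:
        name: worker            # the Deployment defined above
      minReplicaCount: 0        # scale to zero when the queue is empty
      maxReplicaCount: 10
      triggers:
        - type: rabbitmq
          metadata:
            queueName: my-queue
            host: amqp://guest:guest@rabbitmq-host
            # mode/value replace the deprecated queueLength setting
            mode: QueueLength
            value: "10"         # target number of messages per replica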

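- scaleTargetRef: Refers to the workload to scale (e.g., deployment, stateful set).

- triggers: Specifies the external event source and scaling conditions.

In this example the trigger targets about 10 queued messages per replica, so a backlog of 45 messages would call for roughly 5 replicas, up to the maxReplicaCount of 10.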
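
The example above embeds the RabbitMQ credentials directly in the trigger metadata. One way to avoid that is KEDA's TriggerAuthentication resource, sketched below. The Secret name `rabbitmq-conn` and the TriggerAuthentication name `rabbitmq-auth` are made up for illustration, and the trigger uses `mode: QueueLength` with `value`, the current form of the queue-length target.

```yaml
# Sketch only: the Secret and TriggerAuthentication names are illustrative assumptions.
apiVersion: v1
kind: Secret
metadata:
  name: rabbitmq-conn                 # hypothetical Secret holding the connection string
stringData:
  host: amqp://guest:guest@rabbitmq-host
---
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: rabbitmq-auth                 # hypothetical name
spec:
  secretTargetRef:
    - parameter: host                 # trigger parameter to fill in
      name: rabbitmq-conn             # Secret to read from
      key: host                       # key inside that Secret
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: rabbitmq-scaler
spec:
  scaleTargetRef:
    name: worker
  minReplicaCount: 0
  maxReplicaCount: 10
  triggers:
    - type: rabbitmq
      metadata:
        queueName: my-queue
        mode: QueueLength
        value: "10"
      authenticationRef:
        name: rabbitmq-auth           # host now comes from the Secret, not inline metadata
```

The Secret, the TriggerAuthentication, and the ScaledObject are assumed to live in the same namespace.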

Advantages of KEDA

- Cost-Effective: Automatically scales down to zero, reducing resource costs during idle times.

- Event-Driven Architecture: Suited for modern applications where scaling is based on external events rather than resource usage.

- Extensible: Easily supports new event sources via custom scalers.

- Simplified Operations: Declarative configuration through Kubernetes custom resources.
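
For the extensibility point above, a custom event source is typically wired in through KEDA's `external` trigger type, which calls a gRPC service that you implement and run yourself. The sketch below assumes such a service already exists; its address and the `pendingThreshold` key are hypothetical, since everything beyond `scalerAddress` is defined by your own scaler.

```yaml
# Sketch only: the scaler service and its custom metadata key are illustrative assumptions.
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: custom-source-scaler          # hypothetical name
spec:
  scaleTargetRef:
    name: worker
  minReplicaCount: 0
  maxReplicaCount: 10
  triggers:
    - type: external
      metadata:
        scalerAddress: my-scaler.default.svc.cluster.local:9090   # hypothetical gRPC scaler service
        pendingThreshold: "25"        # made-up key, passed through to the custom scaler
```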

When to Use KEDA

- Event-Driven Workloads: Your application processes events or metrics from external systems.

- On-Demand Scaling: You want workloads to scale up only when needed and scale down to zero when idle.

- Diverse Event Sources: You need to scale based on non-traditional triggers, such as queue length or database metrics.

KEDA enables developers to build efficient, responsive, and cost-effective applications by seamlessly integrating event-driven scaling into Kubernetes environments.